This article summarises research undertaken by Mike Gilmore (during his time working for the FCA), Deanna Karapetyan, Gráinne Murphy, Cherryl Ng and Jackie Spang.

We’ve all received at least 1 email from our pension provider that we’ve ignored or promised ourselves we’ll go back to later. You tell yourself that retirement is far away or you’re sure it’s all in hand. But the reality is that many consumers approaching retirement have not engaged with their pension or how they will access it. Our Financial Lives survey 2022 suggests over half (52%) of defined contribution (DC) pension holders aged 45 and over do not have a clear plan for how to take the money built up in their pension. A further 14% did not even know that they need to make a decision on how to take their pension pots (also known as decumulation), and 34% of DC pension holders aged 45 and over reported that they do not understand their decumulation options.

There’s clearly more to be done to support consumers throughout their pensions journey.

Under the new Consumer Duty, firms need to consider the most appropriate way of supporting their customers by equipping them to make effective, timely and properly informed decisions. Firms must tailor communications to meet the needs of the customers they are intended for - and test and monitor that they are doing so.

Underpinned by these Consumer Duty obligations, we are working with industry to run field trials to explore effective touchpoints for engaging consumers with their pension, with a particular focus on decumulation. Given there are many ways and times to try to engage consumers, we want our trials to add to the sum of knowledge in this area, rather than to develop a single prescribed way to engage consumers. Firms will be able to use our evidence, as part of wider work, to build communication plans that benefit consumers. In particular, it highlights the importance of testing messaging.

However, messaging alone will not result in the best outcomes for consumers. Effective communications can support consumer understanding and enable good decision-making, but they rely on other regulatory levers to achieve their intended outcome. An example of this in pensions is the development of pensions dashboards.

Our field trial will test behaviourally informed messages, sent at different touchpoints, to understand the most effective times to engage consumers about their pension. Our proxy for engagement will be whether consumers opt to click through to the guidance.

What we found works

Before our field trial, we conducted online testing to find out which messages landed with consumers. Through 3 online experiments and 1 qualitative study, we have shown how behavioural language can improve email open rates and affect consumer understanding. We found that clear email formatting and addressing present bias and overconfidence were effective at engaging consumers. We also showed the importance of pre-testing and of using feedback to redesign our treatment emails, after discovering that our first messages had the opposite of their intended effect (they backfired).

Why we all ignore emails we need to action

We all face various behavioural barriers when engaging with our pensions. The key barriers we found that affected consumers’ willingness to seek guidance on their pension were:

- Inertia – an unwillingness to act

- Present bias – placing a lower value on future outcomes

- Overconfidence – overestimating your own ability or knowledge on a subject

- Mistrust – dismissing relevant information because of a lack of trust in the source

- Information overload – feeling overwhelmed with how much information there is to digest, so avoiding it entirely

Our subject lines: Enticing you to open

Our first experiment was designed to capture the effect of the subject lines on participants opening the emails, and the effect of email design on participants clicking the email’s call to action. The experiment used a mock email inbox to replicate the consumer experience in a controlled environment (see Figure 1). Participants were asked to engage with the inbox as if it were their own. They were shown a range of different email subject lines, designed to simulate a typical inbox. One of these was our test pension subject line. If participants opened the pension email, they were then shown one of our test emails. These had a clickable call to action, and we recorded the participants’ interaction with it.

We show the subject lines we tested in the table below.

| Subject line name | Subject line | Barrier tackled | Explanation |
|---|---|---|---|
| Control | Get free support from MoneyHelper | None | A clear and to-the-point subject line, used as a comparison for the treatments. |
| Few more steps | Only a few more steps until you’re retirement ready | Information overload | Uses a foot-in-the-door technique to reduce the perceived burden of pensions. |
| Future you | The future you will thank you for this | Present bias | Makes the recipient place more value on future outcomes. |
| Key questions | Can you answer key questions about your retirement plans? | Overconfidence | Makes the recipient think critically about their own plans. |
| Take income | How will you take your retirement income? | Overconfidence/present bias | Makes the recipient relate to their pension in a present-day framing of income. |

Our emails: Ensuring you read on

We tested different types of emails (ie treatment emails) to encourage recipients to seek guidance from MoneyHelper, a free Government-backed guidance service.

While our control email was plain and neutral in its framing of the service, our treatment emails were designed to tackle specific behavioural barriers. We used 4 different treatment emails, each containing behavioural messaging (eg social norms), to tackle the barriers above.

- The head start email was designed to tackle information avoidance/overload, inertia and present bias. The email featured a timeline showing that the individual was already on the path to pension planning and closer to being ready than they might believe. We also provided some information about their pensions but kept this short and concise.

- The specific questions email was designed to tackle overconfidence. Three questions featured at the top of the email to make the participant think about their future and be critical of any overconfidence they have.

- The present bias email was designed to tackle present bias and overconfidence. Two critical questions were asked: one about their lifestyle, and the other about their income. This was to challenge the assumption that, just because they have the right resources and planning for their life today, they will also have them in the future.

- The social norms email was designed to tackle mistrust and overconfidence. By showing that most people seek help when making retirement plans, it normalises seeking help and makes people more willing to engage.

Can we nudge a click?

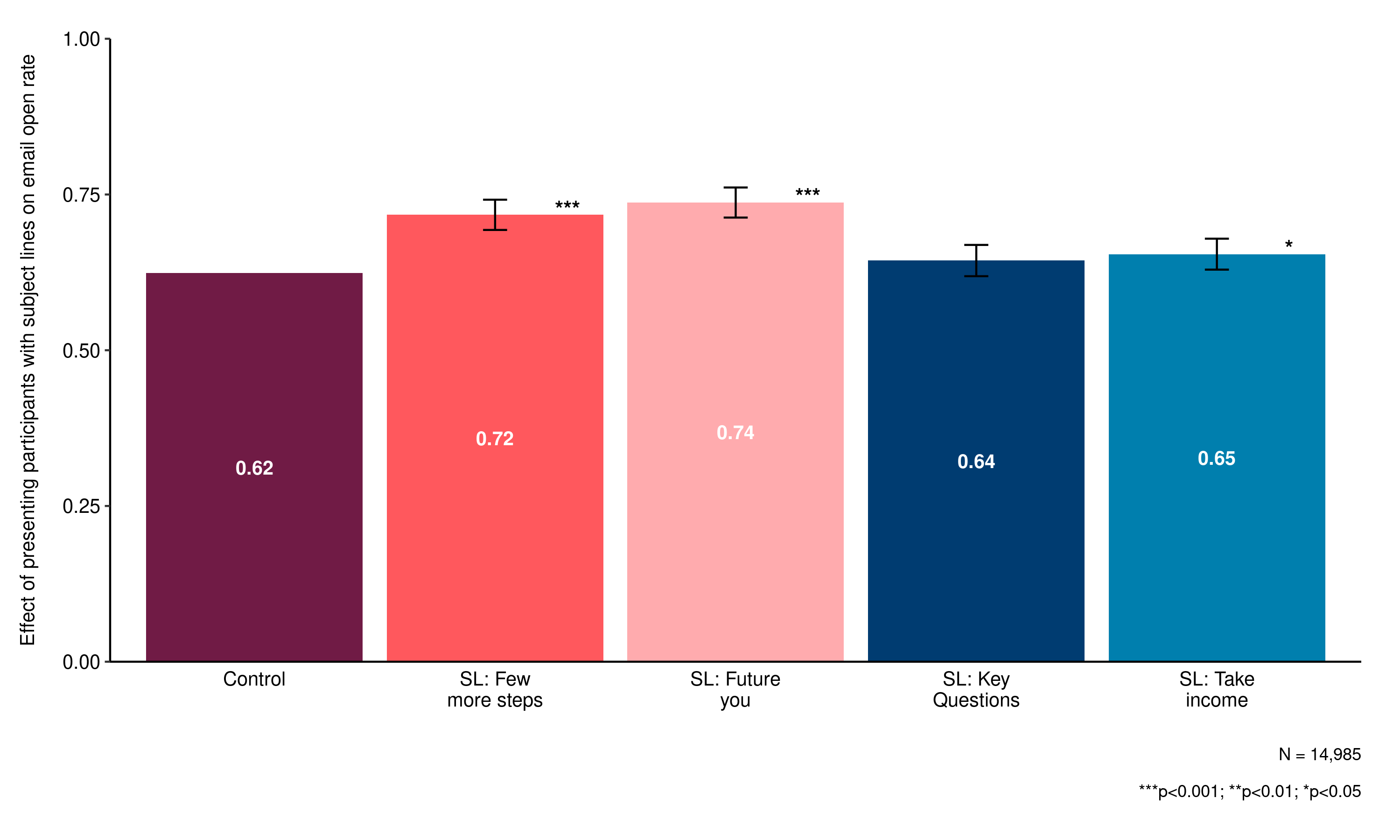

Our subject lines were enough to pique the interest of some participants. We made the largest impact by addressing present bias. The ‘Future you’ and ‘Few more steps’ subject lines beat the control open rate (62%) by 12 and 10 percentage points respectively.

However, we didn’t see an uplift in the click-through rate. In the control email, 15% of participants clicked the call to action, and this was unchanged for our treatment emails. While the behavioural wording of our subject lines worked as intended, our emails did not.

Just because you open an email, doesn’t mean you’ve read it

Our second experiment tested the effect of our treatment emails on comprehension and attitudes towards seeking MoneyHelper guidance. Participants were randomised to see 1 of our 4 treatment emails or the control email. They were then asked to answer questions about their understanding of decumulation and the MoneyHelper service. We were also interested in understanding if the treatment emails affected the participants’ attitudes towards guidance, in terms of their:

- likelihood to ever use MoneyHelper

- likelihood to use MoneyHelper in the next 12 months

- how helpful they think MoneyHelper would be

- how much they would trust MoneyHelper

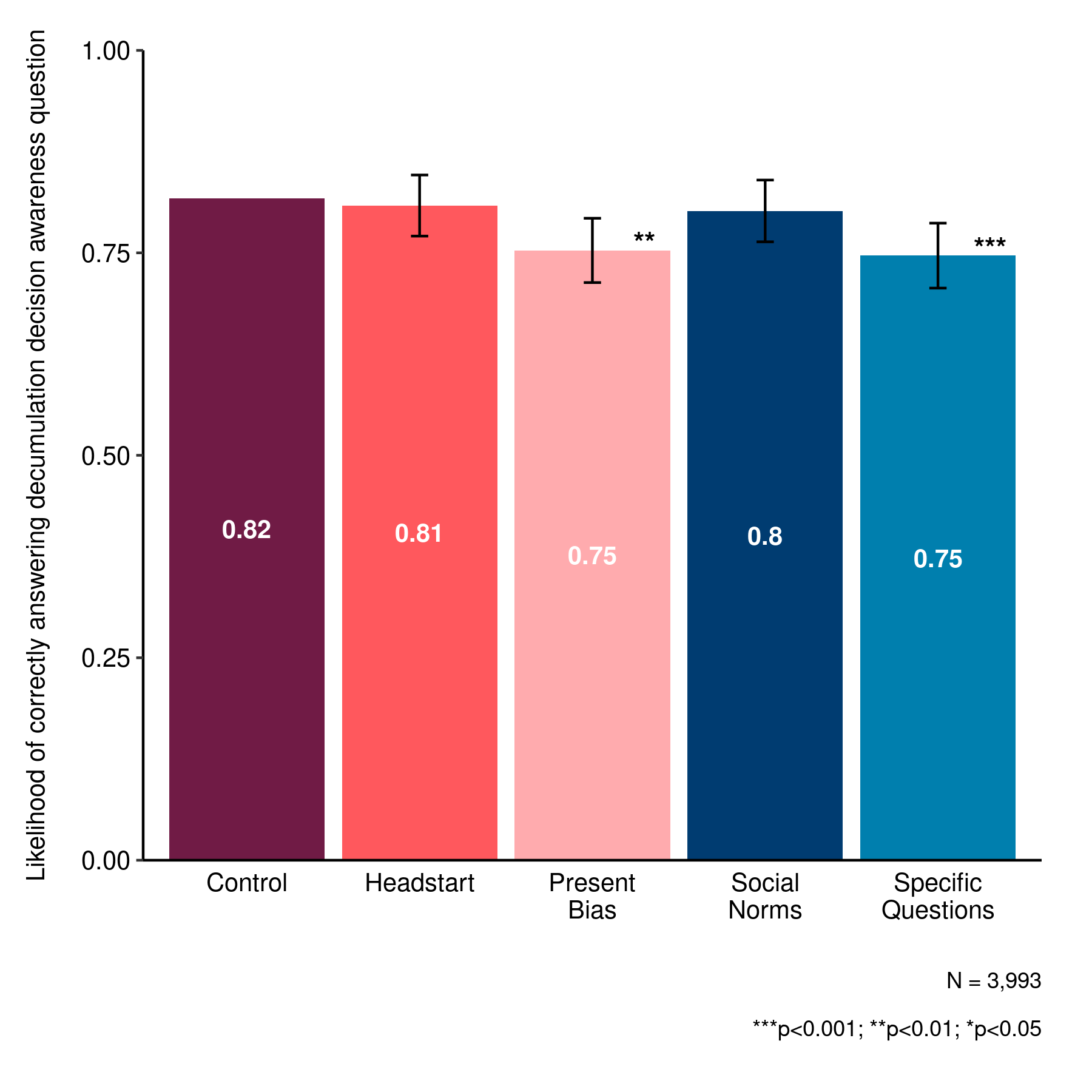

While respondents who saw the control email got the decumulation question right 81% of the time, all 4 of the treatment emails performed between 15 and 20 percentage points worse. The treatment emails also seemed to negatively affect participants’ attitudes towards MoneyHelper. Participants who saw the treatment emails were less likely to say they’d use MoneyHelper, or to find it helpful or trustworthy.

Unexpected results show the importance of pre-testing

Looking at the results from experiments 1 and 2, our treatment emails had backfired. To understand why this had happened, we reviewed the qualitative comments from the participants across both studies. Several participants felt that our colourful emails, designed to stand out, looked like spam or a scam. Many people switch off or lose trust if an email looks like marketing.

Despite this, in experiment 1 the treatment click-through rates matched the control rather than falling below it, as might have been expected. So we hypothesise that any positive effect from the behavioural messaging may have been offsetting the negative effects of the design.

Using this evidence, we decided to redesign our treatment emails in the style of our control, which had performed strongly. We kept the emails plain and neutral, while maintaining the behavioural messaging to try to boost engagement. Since our first 2 experiments had produced unexpected results, we pre-tested our adapted treatment emails qualitatively.

We tested the new designs with 80 participants, who had a generally positive response to the content and found that it helped to foster an interest in MoneyHelper. Some respondents liked that it was Government-backed, impartial – and free. Stressing the word ‘free’ earlier in the email generated more positive responses.

Satisfied that our redesign was less likely to backfire based on the qualitative results, we ran 1 final online experiment to pre-test our treatment emails before the field trial. We repeated our second experiment with a new group of participants so we could compare comprehension and attitudes to see if they improved.

Using social norms is an effective way to engage consumers

Overall, our new treatment emails performed better than our earlier drafts. The control email once again performed strongly, but our social norms and head start treatment emails now performed as well as the control. This was a clear improvement on our previous drafts. Our new treatment emails also gave participants a more positive attitude towards MoneyHelper overall. The head start and specific questions emails led to a higher proportion of participants saying they would visit the MoneyHelper website in the next 12 months. Despite this, both treatments led to a lower understanding of what MoneyHelper is.

Taking both comprehension and attitudinal scores into account, the social norms email was the most effective treatment overall. Based on our hypothesis that the behavioural messaging may have been offsetting the negative impact of the previous designs on the click-through rate in experiment 1, we wanted to test the effect of behavioural messaging in the field. So we decided to take the strongest performing behaviourally informed message, the social norms email, through to the field trial.

Next steps – our field trial

Using what we’ve learnt from these online trials, we’re now testing this with consumers in a real-world setting. We are working with pension firms to use our social norms email to identify effective touchpoints to engage consumers with their pension.

The backfire effect of the earlier drafts of our treatment emails shows the importance of pre-testing interventions to hone them before launching in the field. Our series of online pre-tests allowed us to test, in the specific context of pension decumulation, different behavioural framings that have been shown to be effective in the behavioural literature. This gave new insights into what works for consumers thinking about seeking pension guidance. We will build on this evidence base with our field trial with industry.